Deploy your Python code to AWS Lambda using the CLI

How to get started creating and deploying a Python package

Why am I doing this?

The AWS-provided tutorials include too much stuff, so here I have simplified things. Plus, I needed to deploy some code.

This is a continuation of my article on getting started with AWS Lambda.

What you will need

- the AWS CLI

- some Python code

- an AWS account

The AWS CLI

You will want to install the AWS CLI. On Windows 10, I enabled the Ubuntu sub-system and just used apt to install it. If you don't have that, see the AWS CLI installation guide to find out more.

Once you have it installed, you will need to configure it. This involves setting up a user and an access key in IAM so the CLI can get access, then running aws configure. The AWS CLI configuration guide walks you through it.

Some Python code

You can find my code on my GitHub.

I needed some code to run. But what? I know! I'll copy the s3-get-object-python blueprint code from the AWS console and just make changes to it. Done.

The blueprint code doesn't do much. When it gets an S3 event it will print and return the MIME-type of the file. Boring. So I added a couple more lines of code to write a file to a different S3 bucket. Exciting. The boto3 SDK makes this really easy.
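Here is a minimal sketch of that kind of handler, assuming the blueprint's approach of reading the bucket and key from the first record in the event. It is a sketch, not a copy of the repository code: TARGET_BUCKET is a hypothetical placeholder for your target bucket, and the optional s3 parameter is added only so the function can be tried locally with a stand-in client. Lambda calls the handler with just event and context, in which case a real boto3 client is created.

```python
import datetime
import urllib.parse

# Hypothetical target bucket name; replace it with your own.
TARGET_BUCKET = "my-target-bucket"
RESULTS_KEY = "s3event-results.txt"


def lambda_handler(event, context, s3=None):
    """Read the uploaded object's MIME type and write a result file to another bucket."""
    if s3 is None:
        # Lambda passes only (event, context), so create a real boto3 client here.
        import boto3
        s3 = boto3.client("s3")

    # The S3 event carries the source bucket name and the URL-encoded object key.
    record = event["Records"][0]["s3"]
    bucket = record["bucket"]["name"]
    key = urllib.parse.unquote_plus(record["object"]["key"])

    new_file = s3.get_object(Bucket=bucket, Key=key)
    content_type = new_file["ContentType"]
    print("CONTENT TYPE: " + content_type)

    # Write a small text file describing the upload into the target bucket.
    target_message = ("The MIME-type is : " + content_type + ". At "
                      + datetime.datetime.now().strftime("%m/%d/%Y %I:%M%p"))
    s3.put_object(Bucket=TARGET_BUCKET, Key=RESULTS_KEY,
                  Body=target_message.encode("utf-8"))
    return content_type
```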

Check out the code on my GitHub.

Before you deploy your code

You need to set up a few things first: a couple of buckets, and an IAM role with rights to execute the function, read from and write to S3 buckets, and access the CloudWatch logs.

Two buckets

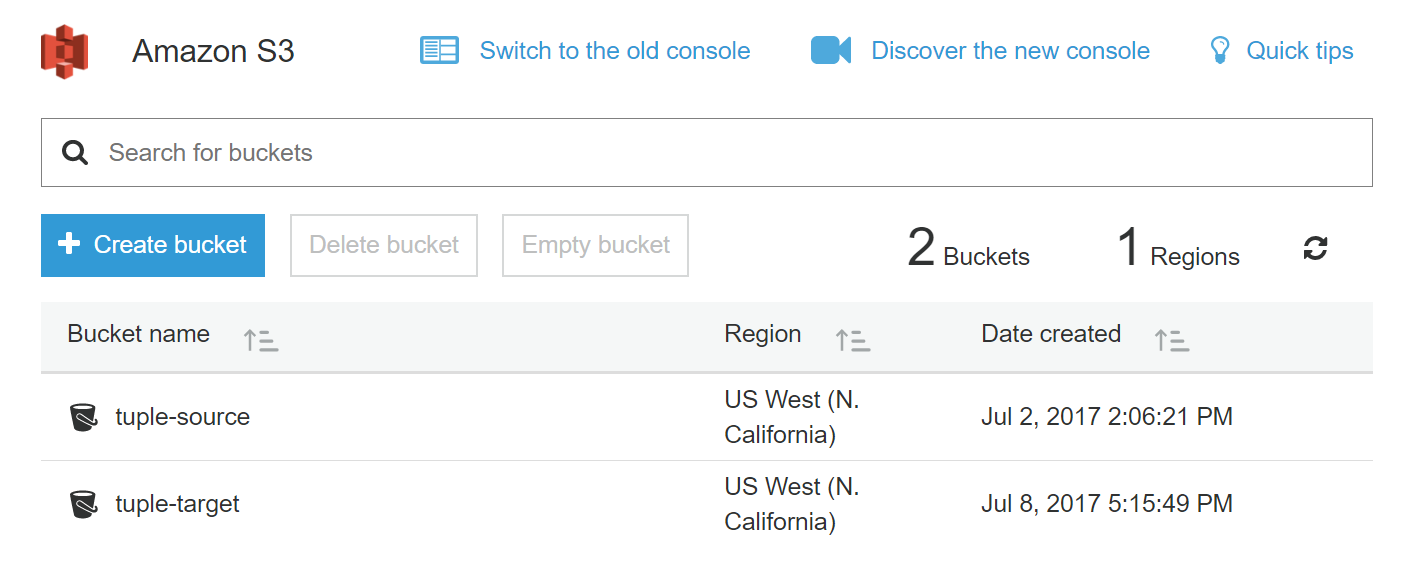

Go to the AWS S3 console and create two buckets. One bucket is the source. This is where we will upload files to process. The other bucket is the target. This is where the output will end up.

NOTE: your Lambda function has to live in the same region as your buckets, so make a note of the region you used for them.

I made mine look like this.
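If you would rather script the bucket setup, a minimal boto3 sketch could look like this. create_buckets is a hypothetical helper, and the names you pass are up to you (S3 bucket names must be globally unique). Outside us-east-1, create_bucket needs a CreateBucketConfiguration naming the region; in us-east-1 that setting must be left out.

```python
def create_buckets(s3, region, names):
    """Create each named bucket in `region`; `s3` is a boto3 S3 client."""
    created = []
    for name in names:
        if region == "us-east-1":
            # us-east-1 is the default location and rejects an explicit constraint.
            s3.create_bucket(Bucket=name)
        else:
            s3.create_bucket(
                Bucket=name,
                CreateBucketConfiguration={"LocationConstraint": region},
            )
        created.append(name)
    return created
```

You would call it with a client for your region, for example create_buckets(boto3.client("s3", region_name="us-west-1"), "us-west-1", ["my-source-bucket", "my-target-bucket"]), where both bucket names are placeholders.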

Three policies

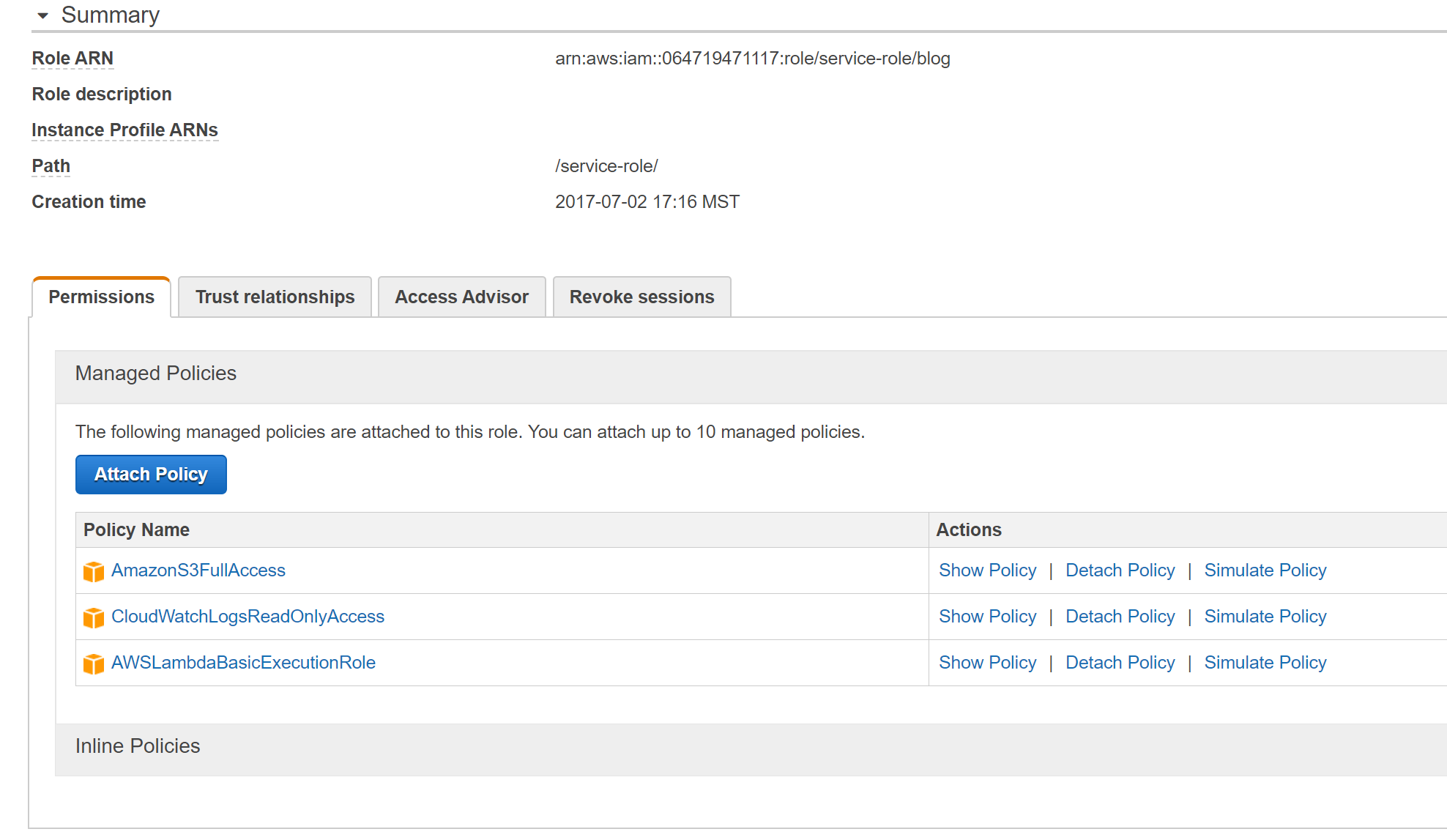

Go to the AWS IAM console and create a new role, choosing Lambda as the service that will use it. Then attach these three policies:

- AmazonS3FullAccess

- AWSLambdaBasicExecutionRole

- CloudWatchLogsReadOnlyAccess

Note the ARN for the role you created because you'll need that later.
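The role can also be created with boto3. This sketch assumes the AWS managed policy ARNs for the three policies above, plus a trust policy that lets the Lambda service assume the role (choosing Lambda as the service in the console sets up the same thing). create_lambda_role is a hypothetical helper that returns the ARN you will need later.

```python
import json

# Trust policy allowing the Lambda service to assume the role.
LAMBDA_TRUST_POLICY = {
    "Version": "2012-10-17",
    "Statement": [{
        "Effect": "Allow",
        "Principal": {"Service": "lambda.amazonaws.com"},
        "Action": "sts:AssumeRole",
    }],
}

# AWS managed policies matching the list above.
POLICY_ARNS = [
    "arn:aws:iam::aws:policy/AmazonS3FullAccess",
    "arn:aws:iam::aws:policy/service-role/AWSLambdaBasicExecutionRole",
    "arn:aws:iam::aws:policy/CloudWatchLogsReadOnlyAccess",
]


def create_lambda_role(iam, role_name):
    """Create the role, attach the policies and return the role ARN; `iam` is a boto3 IAM client."""
    role = iam.create_role(
        RoleName=role_name,
        AssumeRolePolicyDocument=json.dumps(LAMBDA_TRUST_POLICY),
    )
    for arn in POLICY_ARNS:
        iam.attach_role_policy(RoleName=role_name, PolicyArn=arn)
    return role["Role"]["Arn"]
```

A freshly created role can take a few seconds to become usable, so creating a function with it right away may fail with an error about the role; waiting briefly and retrying usually helps.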

Bundle it up for deployment

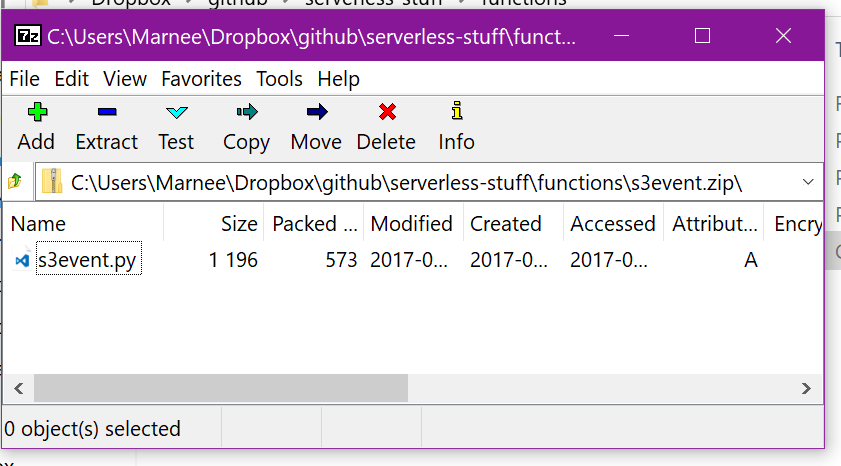

To use the CLI, you need to zip up your code. If you have no dependencies other than Python and boto3 (which the Lambda Python runtime already provides), you only have to zip up your own code. Here I will show how to do this with one code file. In a later post I will show how to zip things up when you use other libraries, but basically you just copy the package folders into your zip file; see the AWS guide on Lambda deployment packages for details. This is easier if you use a virtualenv (you should be using one anyway).
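Besides a zip tool, you can build the archive with Python's standard zipfile module. This is a sketch assuming a single code file named s3event.py, and build_deployment_zip is a hypothetical helper. The important detail is that the code file sits at the root of the archive, not inside a folder, or the handler setting will not find it.

```python
import os
import zipfile


def build_deployment_zip(source_file, zip_path):
    """Zip a single handler file so it sits at the root of the archive."""
    with zipfile.ZipFile(zip_path, "w", zipfile.ZIP_DEFLATED) as zf:
        # arcname drops the directories, so s3event.py lands at the top level.
        zf.write(source_file, arcname=os.path.basename(source_file))
    return zip_path
```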

I zipped up my single code file, s3event.py, into s3event.zip.

Now I start up the Ubuntu sub-system, because this is the current year and Microsoft <3 Linux, and I run the CLI command. See the create-function page in the AWS CLI command reference for all the options.

```shell
aws lambda create-function \
    --function-name "s3event" \
    --runtime "python3.6" \
    --role "arn:aws:iam::064719471117:role/service-role/blog" \
    --handler "s3event.lambda_handler" \
    --region us-west-1 \
    --zip-file "fileb:///mnt/c/Users/Marnee/Dropbox/github/serverless-stuff/functions/s3event.zip"
```

Let's go over the arguments I am using here:

--function-name "s3event"

What do you want to call your function? I called mine s3event.

--runtime "python3.6"

What language and version do you want to use? I'm running Python 3.6.

--role "arn:aws:iam::064719471117:role/service-role/blog"

Under what IAM role will the function run? This is the ARN of the role you created earlier, which lets the function execute and gives it read and write access to S3.

--handler "s3event.lambda_handler"

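What function in your code should be executed? This is the starting point of the function execution. You have to use the pattern file name.function name: the name of your code file without the .py extension, a dot, and then the name of the handler function. Here s3event is the file s3event.py, and lambda_handler is this function inside it: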

```python
def lambda_handler(event, context):
    ...  # the handler body goes here
```
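To make the pattern concrete, here is a rough illustration of how a "module.function" string maps to code. It is not the Lambda runtime's actual implementation, just a sketch using importlib, and resolve_handler is a hypothetical name. For s3event.lambda_handler it would import the module s3event and look up lambda_handler in it.

```python
import importlib


def resolve_handler(handler):
    """Turn a 'module.function' handler string into a callable (illustration only)."""
    # Split on the last dot: everything before it names the module (the code file).
    module_name, function_name = handler.rsplit(".", 1)
    module = importlib.import_module(module_name)
    return getattr(module, function_name)
```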

--region us-west-1

This must be the same region where your S3 buckets live. You can find a list of regions in the Available Regions section of the AWS documentation on regions and availability zones.

--zip-file "fileb:///mnt/c/Users/Marnee/Dropbox/github/serverless-stuff/functions/s3event.zip"

What is the path to your zipped-up package? I am using the Ubuntu sub-system, but my files are on my Windows disk, which is mounted into my Ubuntu machine under /mnt/c. Note the fileb:// prefix: it tells the CLI to read the file as binary, and the third slash is simply the start of the absolute path that follows.
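If you keep your files on the Windows side like I do, the path translation is mechanical. This hypothetical helper assumes the default WSL layout, where drive C: is mounted at /mnt/c, and turns a Windows path into the fileb:// argument.

```python
from pathlib import PurePosixPath, PureWindowsPath


def to_wsl_fileb(windows_path):
    """Convert a Windows path into a fileb:// argument usable from WSL."""
    p = PureWindowsPath(windows_path)
    # "C:" becomes /mnt/c under the default WSL mount layout.
    drive = p.drive.rstrip(":").lower()
    # p.parts[0] is the drive anchor ("C:\\"); the rest are the folders and file.
    posix = PurePosixPath("/mnt", drive, *p.parts[1:])
    return "fileb://" + str(posix)
```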

There are a lot of other options, but these will get you going.
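The same function can be created from Python with boto3's Lambda client, which takes the zip file's bytes instead of a fileb:// path; the region comes from the client, for example boto3.client("lambda", region_name="us-west-1"). This is a sketch with a hypothetical helper name. AWS has since deprecated the python3.6 runtime, so you may need to pass a newer runtime identifier.

```python
def create_s3event_function(lambda_client, zip_path, role_arn, runtime="python3.6"):
    """Create the s3event function from a local zip, mirroring the CLI call above."""
    with open(zip_path, "rb") as f:
        zipped_code = f.read()  # boto3 takes raw bytes; no fileb:// prefix needed
    return lambda_client.create_function(
        FunctionName="s3event",
        Runtime=runtime,
        Role=role_arn,
        Handler="s3event.lambda_handler",
        Code={"ZipFile": zipped_code},
    )
```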

Go to the AWS Lambda console and admire the beauty. You should see your new function in the Functions list.

If you need to change some code, you can update the function with the CLI like this:

```shell
aws lambda update-function-code \
    --function-name "s3event" \
    --zip-file "fileb:///mnt/c/Users/Marnee/Dropbox/github/serverless-stuff/functions/s3event.zip"
```
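With boto3 the update is a single update_function_code call that takes the zip bytes directly. Here is a small sketch; update_code is a hypothetical helper name.

```python
def update_code(lambda_client, zip_path, function_name="s3event"):
    """Upload a new zip for an existing function; `lambda_client` is a boto3 Lambda client."""
    with open(zip_path, "rb") as f:
        zipped_code = f.read()
    # update_function_code takes the bytes directly, not wrapped in a Code dict.
    return lambda_client.update_function_code(FunctionName=function_name, ZipFile=zipped_code)
```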

Event trigger

The command above does not set up the S3 trigger. You can use either the CLI or the console to do this. I just used the console.

- Go to the Lambda console and select your function.

- Go to the Triggers tab

- Click Add Trigger

- Click the grey dashed box

- Select S3

- Select your bucket

- Select the event type. In this case we want the Object Created (All) option.

- Check the box to enable the trigger, then click Submit.
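For reference, scripting the trigger takes two calls: granting S3 permission to invoke the function, then adding a notification configuration on the source bucket for s3:ObjectCreated:* events, which corresponds to Object Created (All). This is a boto3 sketch; add_s3_trigger and the statement ID are hypothetical. Be aware that put_bucket_notification_configuration replaces the bucket's whole notification configuration, so it would overwrite any existing notifications.

```python
def add_s3_trigger(lambda_client, s3, function_name, function_arn, bucket):
    """Allow S3 to invoke the function, then subscribe it to ObjectCreated events."""
    # Resource-based permission so the S3 service may call the function.
    lambda_client.add_permission(
        FunctionName=function_name,
        StatementId="s3-invoke",  # hypothetical ID; must be unique per function
        Action="lambda:InvokeFunction",
        Principal="s3.amazonaws.com",
        SourceArn="arn:aws:s3:::" + bucket,
    )
    # Equivalent of the "Object Created (All)" event type in the console.
    s3.put_bucket_notification_configuration(
        Bucket=bucket,
        NotificationConfiguration={
            "LambdaFunctionConfigurations": [
                {"LambdaFunctionArn": function_arn, "Events": ["s3:ObjectCreated:*"]}
            ]
        },
    )
```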

That's it. Now we can test it using the console's test feature or just by uploading a file. I talk about using the test feature in a previous blog post, so let's just try uploading something.

Go to your S3 console and select your source bucket. Go through the steps to upload a file to the bucket. Then go to your target S3 bucket and check for a file. You should see the output file s3event-results.txt because that is the name of the file in the code.

Download the file and look inside. You should see the output.

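```python
import datetime

# this is the output format according to the code
# (now() has to be called and turned into a string before it can be concatenated)
target_message = ("The MIME-type is : " + new_file["ContentType"]
                  + ". At " + datetime.datetime.now().strftime("%m/%d/%Y %I:%M%p"))
```

This is the output of the code:

```
The MIME-type is : image/jpg. At 7/16/2017 2:28PM
```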
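The upload-and-check round trip can also be scripted. This sketch assumes the result key s3event-results.txt from the code and placeholder bucket names, and upload_and_fetch is a hypothetical helper. It polls a few times because the function runs asynchronously after the upload. An old result file from an earlier run would also be found, so delete it first if you want a clean check.

```python
import time


def upload_and_fetch(s3, local_file, source_bucket, key, target_bucket,
                     results_key="s3event-results.txt", attempts=10, delay=2.0):
    """Upload a file to the source bucket, then poll the target bucket for the result."""
    s3.upload_file(local_file, source_bucket, key)
    for _ in range(attempts):
        try:
            result = s3.get_object(Bucket=target_bucket, Key=results_key)
            return result["Body"].read().decode("utf-8")
        except s3.exceptions.NoSuchKey:
            # The function runs asynchronously, so the result may not exist yet.
            time.sleep(delay)
    return None
```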

If you didn't get any output file, I'm sorry! There are some things you can do. Try the test feature. This will give you immediate results in the console. Or you can check the logs.
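For the console's test feature (or a local call), a trimmed-down S3 event is enough, since the blueprint code only reads the bucket name and object key. This sketch builds such an event; it is a minimal subset of the real S3 notification format, make_s3_put_event is a hypothetical helper, and the bucket and key are placeholders.

```python
import json


def make_s3_put_event(bucket, key, region="us-west-1"):
    """Build a minimal S3 ObjectCreated event with the fields the handler reads."""
    return {
        "Records": [{
            "eventSource": "aws:s3",
            "awsRegion": region,
            "eventName": "ObjectCreated:Put",
            "s3": {
                "bucket": {"name": bucket, "arn": "arn:aws:s3:::" + bucket},
                "object": {"key": key},
            },
        }]
    }


# Paste the printed JSON into the console's test event editor.
print(json.dumps(make_s3_put_event("my-source-bucket", "photo.jpg"), indent=2))
```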

Where are my logs?

On the AWS Lambda console go to the Monitoring tab. Click the link to view logs in CloudWatch.
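You can also read the logs from Python. By default Lambda writes to a CloudWatch Logs group named /aws/lambda/ followed by the function name. This sketch, with a hypothetical helper name, fetches only the first page of events from the last few minutes.

```python
import time


def log_messages_since(logs, function_name="s3event", minutes=15, limit=50):
    """Return log messages from the last `minutes` minutes; `logs` is a boto3 Logs client."""
    start_ms = int((time.time() - minutes * 60) * 1000)  # CloudWatch uses epoch milliseconds
    response = logs.filter_log_events(
        logGroupName="/aws/lambda/" + function_name,  # default Lambda log group name
        startTime=start_ms,
        limit=limit,
    )
    return [event["message"] for event in response.get("events", [])]
```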

If you do not see anything logged, you probably have a broken trigger configuration. Go to the Triggers tab and make sure it is set up correctly, and make sure your Lambda function and S3 bucket are in the same region. I am not completely sure about this, but I think that when I update the code, the trigger sometimes stops working. If that happens, try deleting the trigger and setting it up again.

Try testing the function using the console until you can figure out what is wrong.

See my previous blog post for more information.

If you have any problems or questions, tweeting me is best, or you can leave a comment here.

Full Stack .NET Programmer and Ham